Defaulty Credit Card Data

For my most recent data science project, I decided to take a look at credit card default rates. More specifically, my "business case" was that I had been hired by a bank to help them predict credit card default. While the data was not nearly as interesting as some that I've explored earlier, the data science process itself has suddenly gotten far more exciting. Machine learning is the topic I've been waiting for since day one of Flatiron, and this first project leveraging its usefulness did not disappoint. I'd like to walk through some of the more interesting parts of the project and discuss what I'm eager to learn more about.

In order to provide a good enough point of reference for the rest of the project highlight reel, I should mention a bit about the data. This was a binary classification problem, and the target was a variable called 'default' that could be zero or one (with the latter indicating that the customer had defaulted). There was a somewhat wide range of data, including non-financial categorical values like marital status, age, and education. There was also what amounted to a six-month chunk of credit card bills and payments, which I think would have been much more fun to analyze alongside each other. Surprisingly though, those two groups had a very minor effect on the models, and in fact the bill values (besides the most recent one) were deemed the least important values in my entire data set by the Lasso regression.

That brings me to my first highlight. Lasso regression is a really interesting way to perform feature selection, and I found myself using it as my method of choice. Once I had written a function for it and could use it on the fly, returning a list of all the features with zero coefficients, feature selection became incredibly easy and satisfying. It is definitely a bit frustrating, though, when it changes every single coefficient to zero because alpha was too high.
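For anyone curious, here is a minimal sketch of what a helper like that could look like. It is not my exact project code: the function name `lasso_zero_features`, the default `alpha`, and the choice to standardize inside the function are all assumptions made for illustration, and the demo data is synthetic.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler


def lasso_zero_features(X, y, alpha=0.01):
    """Fit a Lasso and return the column names whose coefficient is exactly zero.

    X is a DataFrame of features and y is the 0/1 target.
    The name and the default alpha are hypothetical, not from the project.
    """
    # Lasso penalizes coefficient size, so features should share a scale.
    X_scaled = StandardScaler().fit_transform(X)
    model = Lasso(alpha=alpha, max_iter=10_000).fit(X_scaled, y)
    return [col for col, coef in zip(X.columns, model.coef_) if coef == 0]


if __name__ == "__main__":
    # Synthetic stand-in data: only "signal" is related to the target.
    rng = np.random.default_rng(0)
    X = pd.DataFrame({
        "signal": rng.normal(size=500),
        "noise": rng.normal(size=500),
    })
    y = (X["signal"] > 0).astype(int)

    print(lasso_zero_features(X, y, alpha=0.01))
    # A very large alpha zeroes out everything, which is the frustrating case.
    print(lasso_zero_features(X, y, alpha=10.0))
```

Anything the function returns is a candidate to drop. If it returns every column, alpha is too high and should be lowered before trusting the result.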

Back to the bills and payments for a moment, though. I was so eager to use them that, before I dropped the bill data that Lasso had deemed worthless, I tried a bit of feature engineering to make a value showing the percentage of the credit card bill that was being paid each month. It looked like a great addition, and it seemed logical that these percentages would have some kind of relationship with defaulting, but the very next Lasso regression told me that they all had to go. What a waste.
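The percentage-paid idea can be sketched roughly like this. The column names (`bill_1`, `paid_1`, and so on), the function name `add_pay_ratios`, and the decision to treat months with no positive bill as a ratio of zero are all assumptions for the sake of the example, not the actual names or rules from my data set.

```python
import pandas as pd


def add_pay_ratios(df, bill_cols, pay_cols):
    """Add one pay_ratio_<i> column per (bill, payment) column pair.

    Column names are supplied by the caller; the ones used in the demo
    below are hypothetical.
    """
    out = df.copy()
    for i, (bill, pay) in enumerate(zip(bill_cols, pay_cols), start=1):
        # A zero or negative bill has no meaningful "percent paid",
        # so mask those months and fill the resulting NaN with 0.
        positive_bill = out[bill].where(out[bill] > 0)
        out[f"pay_ratio_{i}"] = (out[pay] / positive_bill).fillna(0.0)
    return out


if __name__ == "__main__":
    demo = pd.DataFrame({
        "bill_1": [100, 0, 200],
        "paid_1": [50, 10, 200],
    })
    print(add_pay_ratios(demo, ["bill_1"], ["paid_1"]))
```

How to handle the zero-bill months is a judgment call. Filling with zero is just one option, and it may well affect whether a model finds the feature useful.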

I left out the third group when I mentioned the data earlier, and this one was just the worst. This group contained 'default' as well as six months' worth of columns representing customers' payment status with the bank. Considering the fact that 'default' is also a payment status with the bank, it's no surprise that these values had an extremely tight relationship, to the point where it annoyingly squished all of the other values. If I remember correctly, it had around 3 times the prediction power of the second-place value.

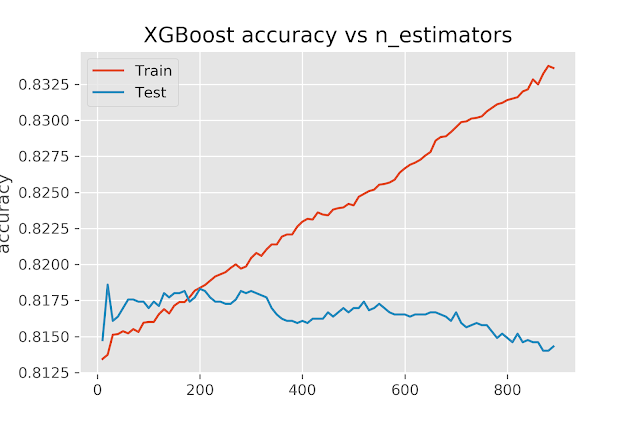

Once the data processing, scaling, and resampling were complete, it was time to start the real fun. There is something inherently satisfying about leaving your computer while it works on some data for you in the background. I think there is also something to be said for the anticipation of waiting for the model to finish running and print your accuracy. There is definitely a counterargument against my enjoyment of walking away while the computer chugs along, though, and I got to see plenty of that as well. Too many times I came back to see that an error had occurred and I'd actually just walked away from the computer while it did nothing at all, and now I'd have to wait out another 20 minutes or more. But those 20 minutes are worth it when you can graph while optimizing your hyperparameters; see below for a couple of examples.

Another factor that has really pulled me into machine learning and helped my enjoyment of it is the sheer variety of estimators and models and whatnot. I'm really looking forward to becoming more fluent in these. It seems like there is always some secret little trick that can be used to improve performance and I can't wait to start figuring these things out for myself.

As for the prediction itself, I was actually a bit disappointed. I ended up around 5% higher in terms of accuracy than the baseline model, and I had thought there would be a slightly larger jump than that. I'm still not sure how to set my expectations for this type of work, and judging by all of the articles I've seen about "when is a model good enough" or "what accuracy should I be aiming for," it seems like this isn't uncommon. In the end though, mine came down to RandomForest and XGBoost, and I still can't believe how close their scores ended up.
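To make the "versus baseline" comparison concrete, here is a rough sketch of one way to set it up. It assumes the baseline is a majority-class predictor (scikit-learn's `DummyClassifier`), which may not match the baseline I actually used. It runs on synthetic imbalanced data rather than the credit card data, and it leaves out XGBoost so that it only needs scikit-learn. The function name `compare_to_baseline` and all parameter values are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split


def compare_to_baseline(X, y, seed=0):
    """Return test accuracy for a majority-class baseline and a random forest."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed
    )
    # Assumed baseline: always predict the most common class.
    baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
    forest = RandomForestClassifier(n_estimators=200, random_state=seed)
    forest.fit(X_train, y_train)
    return {
        "baseline": accuracy_score(y_test, baseline.predict(X_test)),
        "random_forest": accuracy_score(y_test, forest.predict(X_test)),
    }


if __name__ == "__main__":
    # Synthetic data with roughly an 80/20 class split.
    X, y = make_classification(
        n_samples=1000, n_features=10, weights=[0.8, 0.2], random_state=0
    )
    print(compare_to_baseline(X, y))
```

On imbalanced data the majority-class baseline already scores high on accuracy, which is part of why a modest jump over it can still represent real signal.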
