Stock Market Predictions and Visualizations

The capstone project has finally come around, and for this blog post I'll be discussing my project as well as the steps I took to get there. I worked with stock market data this time around. Unlike most of the previous projects, I didn't set out to answer a super specific question based on the data as my goal. Instead, I wanted to build something that would make analyzing the dataset simple and intuitive with just a few clicks. I set out to make something extremely interactive, containing a huge amount of information. I also wanted to include two topics in finance that interest me, yield rates and economic sectors. Of course this is still a data science project, so there would also have to be an emphasis on machine learning and how it could be used to predict future prices.

To begin, I'll briefly explain the data that I used for this project. I started with two datasets from the main US stock exchanges (NYSE and NASDAQ), which contained descriptive data such as stock names, symbols, sectors, and market capitalization. I combined these to form a single list containing every stock symbol that I wanted to include in my analysis. I then used that list of symbols as input to a function that scraped Yahoo Finance for historical data, which gave me my primary dataset: a one-year time series with daily values for each stock, such as high, low, open, and close prices. Lastly, I pulled daily yield values from the US Treasury website.

Then I moved on to the data cleaning and feature engineering stage, which for this project was quite extensive. First, there were some columns in the stock exchange dataset that I wanted to add to the time series, namely sector and market capitalization. I added sectors because I planned on using them in my modeling as well as my visualizations, and I needed the market cap values in order to narrow down the very large dataset. I did this by filtering out any stocks too small to be considered 'mid-cap.' Mid-cap companies are generally defined as those valued between two and ten billion dollars, so any stocks with a market cap under two billion were removed from the dataset. Next, I gave the dataset a multi-level index, so that each row was indexed by a stock symbol and a date. I also filtered out any stocks that had started trading within the past year, since I was already working with a fairly short time frame and including even shorter series would complicate my analysis without adding any real value.
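As a rough illustration of these cleaning steps (not the project's actual code), here is a minimal pandas sketch. The column names (`symbol`, `sector`, `market_cap`, `date`, `close`), the toy values, and the "full history" test used to spot recently listed stocks are all assumptions made for the example.

```python
import pandas as pd

# Hypothetical exchange listing: one row per symbol.
listing = pd.DataFrame({
    "symbol": ["AAA", "BBB", "CCC"],
    "sector": ["Technology", "Energy", "Technology"],
    "market_cap": [5.0e9, 1.2e9, 40.0e9],
})

# Hypothetical daily prices. CCC only has 3 of the 5 days,
# standing in for a stock that started trading recently.
dates = pd.bdate_range("2020-01-06", periods=5)
prices = pd.DataFrame({
    "symbol": ["AAA"] * 5 + ["BBB"] * 5 + ["CCC"] * 3,
    "date": list(dates) * 2 + list(dates[2:]),
    "close": [10.0, 10.2, 10.1, 10.4, 10.5,
              5.0, 5.1, 5.0, 4.9, 5.2,
              80.0, 81.0, 79.5],
})

# Keep only companies at or above the mid-cap floor of two billion dollars.
MID_CAP_FLOOR = 2e9
kept = listing[listing["market_cap"] >= MID_CAP_FLOOR]

# Attach sector and market cap to every daily row of the surviving symbols.
merged = prices.merge(kept, on="symbol", how="inner")

# Drop symbols that do not cover the full date range.
rows_per_symbol = merged.groupby("symbol")["date"].transform("count")
merged = merged[rows_per_symbol == len(dates)]

# Multi-level index: (symbol, date).
stocks = merged.set_index(["symbol", "date"]).sort_index()
print(stocks)
```

With this layout, `stocks.loc["AAA"]` returns the full time series for a single symbol, which is convenient when looping over stocks later on.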

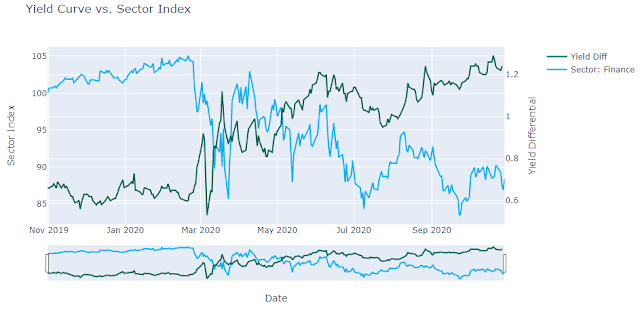

I then moved on to some simple feature engineering. I added a column showing a one week moving average so that I would have an easy way of identifying short term trends in price movement. I also included one showing the percentage change in price over the previous day. Finally, I added one last column showing the intraday spread between the high and low values. My thought process was that this would be a simple but effective way to measure volatility. Then I created a new dataframe very similar to the stock time series, but using sector averages instead of stocks. On this frame I added a 'sector index' of sorts which started at one hundred for each sector and then moved according to the average percentage change of the sector. Since they all started at the same point, they would inevitably spread out and show a clear picture of which sectors were outperforming and which were underperforming. I ended up including this graph in the interface I built, seen below.
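The features and the sector index described above could be computed along these lines. This is a sketch under assumptions: the column names and toy prices are invented, "one week" is taken to mean five trading days, and the sector index compounds the equal-weighted average daily return of each sector.

```python
import pandas as pd

dates = pd.bdate_range("2020-01-06", periods=6)
df = pd.DataFrame({
    "symbol": ["AAA"] * 6 + ["BBB"] * 6 + ["CCC"] * 6,
    "date": list(dates) * 3,
    "sector": ["Technology"] * 12 + ["Energy"] * 6,
    "close": [100, 102, 101, 103, 104, 106,
              50, 49, 51, 52, 51, 53,
              20, 20, 21, 21, 22, 22],
}).astype({"close": float})
df["high"] = df["close"] + 1.0   # toy intraday high
df["low"] = df["close"] - 1.0    # toy intraday low
df = df.set_index(["symbol", "date"]).sort_index()

by_symbol = df.groupby(level="symbol")["close"]

# Five-trading-day moving average, computed separately for each stock.
df["ma_5d"] = by_symbol.transform(lambda s: s.rolling(5).mean())

# Percentage change versus the previous trading day, per stock.
df["pct_change"] = by_symbol.pct_change()

# Intraday spread between high and low as a simple volatility proxy.
df["spread"] = df["high"] - df["low"]

# Sector index: average the daily returns within each sector, then
# compound them from a base of 100. The first day has no return, so it
# is treated as 0 and every sector starts at exactly 100.
flat = df.reset_index()
sector_ret = flat.groupby(["sector", "date"])["pct_change"].mean().fillna(0.0)
sector_index = 100 * (1 + sector_ret).groupby(level="sector").cumprod()
print(sector_index.unstack("sector"))
```

Because every sector starts from the same base, the final values can be read directly as relative performance over the period.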

Moving on to the final dataset, which contained the yield data, I started by removing any dates that fell outside the timeframe I was examining. Then I added two columns representing two different yield spreads, which are used in finance to roughly represent the shape of the yield curve. For reference, this curve is the shape made when plotting interest rates against the maturities they apply to. In this case I used the most common spreads, which are the difference between ten-year and two-year interest rates and the difference between thirty-year and five-year interest rates. The smaller the difference between these values, the flatter the yield curve is said to be, and the shape of the curve is widely considered a strong economic indicator. Finally, I moved on to my last piece of data cleaning, which was to create a custom frequency for the date values, since the stock market isn't open every day. Initially I used a built-in federal holiday calendar, but when I cross-referenced those dates with the dates that were actually present in the data, I found that the stock market is open on two of those holidays and closed on one non-federal holiday. After making the proper adjustments, my custom frequency was good to go and I was finally ready to get started on the modeling.
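Here is a hedged sketch of both steps using pandas. The yield column names and values are made up for illustration (they are not real Treasury data). The post does not name the holidays involved, so the calendar below assumes they are Columbus Day and Veterans Day (federal holidays on which the NYSE trades) and Good Friday (a market holiday that is not a federal one).

```python
import pandas as pd
from pandas.tseries.holiday import (
    AbstractHolidayCalendar,
    GoodFriday,
    USFederalHolidayCalendar,
)
from pandas.tseries.offsets import CustomBusinessDay

# --- Yield spreads (illustrative values, hypothetical column names) ---
yields = pd.DataFrame(
    {"2 Yr": [1.5, 1.6], "5 Yr": [1.7, 1.7],
     "10 Yr": [2.0, 2.0], "30 Yr": [2.5, 2.4]},
    index=pd.to_datetime(["2019-01-02", "2019-01-03"]),
)
yields["spread_10y_2y"] = yields["10 Yr"] - yields["2 Yr"]
yields["spread_30y_5y"] = yields["30 Yr"] - yields["5 Yr"]

# --- Custom trading-day frequency ---
# Assumption: the two federal holidays on which the market is open.
OPEN_ON = {"Columbus Day", "Veterans Day"}


class MarketCalendar(AbstractHolidayCalendar):
    """Federal holidays minus the two open days, plus Good Friday."""
    rules = [
        rule for rule in USFederalHolidayCalendar.rules
        if rule.name not in OPEN_ON
    ] + [GoodFriday]


trading_day = CustomBusinessDay(calendar=MarketCalendar())
days = pd.date_range("2019-01-01", "2019-12-31", freq=trading_day)
print(len(days), "trading days generated for 2019")
```

A frequency built this way can be passed wherever pandas accepts a `freq`, for example `series.asfreq(trading_day)`. It is still worth comparing the generated dates against the dates actually present in the data, as described above.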

I decided to take two separate approaches when creating a model for the data, as I was interested in whether one might outperform the other. The first model was an autoregressive integrated moving average, or ARIMA. For this model, I decided that rather than tuning ARIMA parameters for each individual stock, I would optimize parameters based on each sector index I had created and then apply those parameters to all stocks within that sector. I had two main reasons for this choice. First, it saved a great deal of computation time, and since stocks tend to follow the general trends of their sectors I was likely not losing much accuracy in exchange. Second, single stocks are much more susceptible to unpredictable 'noise' in the data, while a sector average will usually smooth out that type of issue. I also went with an option I had never tried before for parameter selection. Rather than using a grid search like I normally would, I stumbled across a module called "pmdarima" which contains a function called auto_arima. From what I read it seems that this was originally a function in R, and the pmdarima developers have created this new function to serve approximately the same purpose in Python. It works by running some initial tests to determine the order of differencing, and then uses stepwise selection to determine the other two parameters. It also has a nice feature allowing the user to select which criterion they would like the function to use when evaluating the model, so I was able to choose based on the Akaike information criterion, which I prefer because it balances goodness of fit against model complexity and so guards against both overfitting and underfitting. Once I had parameters for each sector, I applied each sector's parameters to every stock within that sector.

For the next model I went with a recurrent neural network known as LSTM, or long short-term memory. This is especially useful when working with time series data to make predictions because its feedback connections let it remember earlier values in a sequence, unlike standard feedforward neural networks.

Once the modeling was complete it was time to work on the visualizations. This was especially important to my project because an interface basically demands that the visuals are clear and effective. In order to accomplish this, as well as make my graphs more interactive, I used a graphing library known as Plotly. This library allowed me to create graphs with labels that pop up when the mouse pointer hovers over them (as seen above), and to add date sliders that let the user view a specific range. Since I also wanted the interface to be easy to use and intuitive, I implemented widgets, mostly in the form of drop-down boxes. Rather than typing in a line of code, the user can simply click on the drop-down box and make a selection, which will then update the graph with the selected values. I also implemented a drop-down that allows the user to switch between graphs, all without any coding. Another nice feature is that I could make selections dependent on each other, so that if, for example, the 'Technology' sector was selected, the next drop-down would list only technology stocks.

Once the graphs and widgets were coded, it was time to put it all together. I will include a couple images below, as well as a link to the GitHub repository. Finally, I will leave a brief description of the graphs that I have implemented so far.

2. Stock vs. Sector w/ Table - Same as above, but also returns a table showing the predicted percent change in value for both the sector index and stock.

3. LSTM Forecasts - Plots the actual test value as well as the predicted value of selected stocks using LSTM. It also returns the root mean squared error. Can be compared to the One Step Ahead to see which model is more accurate with its predictions.

4. Compare Sectors - This graph shows the performance of each sector over the past year, with each index starting at 100. Hovering over it makes all of the names appear from highest to lowest at that particular date. Easy to see which sectors are performing well and which ones are not.

5. One Step Ahead Forecast - Plots the selected stock, along with its one step ahead prediction and confidence interval. Can be compared to the LSTM to give an idea of which model makes the better predictions.

6. Yield vs. Stock Value - Plots the stock of your choice along with your choice of yield curve. Makes it easy to see any potential relationships between stocks and yield spreads. Also has a date slider at the bottom.

7. Yield vs. Sector Value - Plots the sector index of your choice along with your choice of yield curve. Makes it easy to see any potential relationships between sector averages and yield spreads.
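Since the LSTM graph reports a root mean squared error and the two models are meant to be compared, here is a small sketch of that calculation. The numbers are made up and do not come from the project's results.

```python
import numpy as np


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))


# Invented test-set prices and two sets of invented predictions.
actual = [100.0, 102.0, 101.0, 103.0]
arima_pred = [99.0, 101.0, 102.0, 104.0]
lstm_pred = [100.0, 100.0, 101.0, 105.0]

scores = {"ARIMA": rmse(actual, arima_pred), "LSTM": rmse(actual, lstm_pred)}
best = min(scores, key=scores.get)
print(scores, "lower error:", best)
```

For a fair comparison, both models should be scored on the same test dates, since RMSE is in the same units as the price.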
